What grade would you give to OCR’s recent report on the curriculum and assessment?

I was horrified by Donald Trump's claim that pets are being eaten in Ohio. And mighty relieved when this was shown to be untrue. Yes, fact-checking is of great value in identifying statements that are either misleading or downright false, especially when made with such conviction by those in authority.

So here are some fact-checks relating to OCR's recent 113-page report Striking the balance: A review of 11–16 curriculum and assessment in England.

Claim

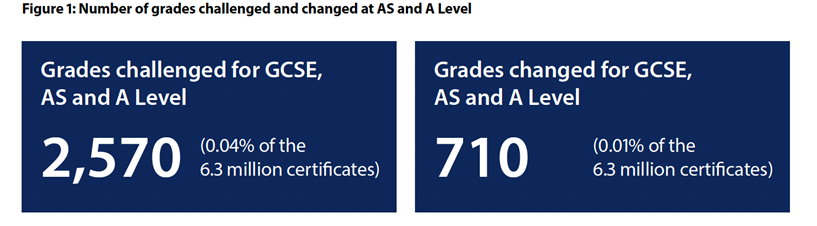

"There are checks and balances through appeals of marking, and the fact that the number of grades challenged in relation to GCSEs, A and AS levels represents 0.04% of the 6.3 million certificates issued suggests high levels of confidence in the system (see figure 1)."

Fact check

This claim, which appears on page 14, is false, three times over.

The first error is one of omission. There is no information as to when those "6.3 million certificates" were awarded. Since numbers of this type usually refer to a single exam series, it may be something of a surprise to discover that this claim relates to the November 2022 and summer 2023 exams in England, combined.

Secondly, the number quoted, 0.04%, is wrong. The true percentage of grades challenged, for the summer 2023 exams in England alone, is more than 100 times larger: just over 4.9%.

Thirdly, the inference that this number – whether the quoted figure of 0.04% or the true figure of 4.9% – "suggests high levels of confidence in the system" is also false.

I'll discuss that shortly – let me deal here with the numbers, as shown in the report's 'figure 1', sourced from this Ofqual website:

Fact check

The caption "Figure 1: Number of grades challenged and changed at AS and A level" has three errors. One is the absence of a date, as already discussed; the second is that the numbers refer not just to AS and A level but to GCSE too; I'll pick up the third – and most important – shortly.

Also, referring to the left-hand box, the number of grades challenged was not 2,570, or 0.04% of awards; for the summer 2023 exams in England alone, the number of grades challenged was 303,270, or 4.9% of awards.

Furthermore, referring to the right-hand box, the number of grades changed as a result of a challenge (as inferred from the caption "Figure 1: Number of grades challenged and changed…") was not 710, 0.01% of awards. For the summer 2023 exams in England, the number was 66,205, about 1.1% of awards.
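For readers who want to check these percentages themselves, here is a minimal Python sketch that recomputes them from the raw counts. It assumes only the figures quoted in this article: the rounded 6.3 million total given by OCR (November 2022 and summer 2023 combined) and the 6,171,265 grades awarded in England for summer 2023. The helper name `pct` is purely illustrative.

```python
# Recompute the percentages quoted above from the raw counts.
# OCR_TOTAL is the rounded 6.3 million used in OCR's report (November 2022
# and summer 2023 combined); SUMMER_2023_TOTAL is the summer 2023 figure
# for England quoted in this article.

def pct(part: int, whole: int) -> float:
    """Return part as a percentage of whole."""
    return 100 * part / whole

OCR_TOTAL = 6_300_000          # rounded, as quoted by OCR
SUMMER_2023_TOTAL = 6_171_265  # summer 2023, England

figures = {
    "appeals (OCR's 'challenged')": (2_570, OCR_TOTAL),
    "grades changed on appeal (OCR's 'changed')": (710, OCR_TOTAL),
    "challenges, summer 2023": (303_270, SUMMER_2023_TOTAL),
    "grades changed after challenge, summer 2023": (66_205, SUMMER_2023_TOTAL),
}

for label, (part, whole) in figures.items():
    print(f"{label}: {pct(part, whole):.2f}%")
```

Run as written, it prints roughly 0.04%, 0.01%, 4.91% and 1.07%, matching the appeals figures in OCR's boxes and the challenge figures given here.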

Resolving the muddle – challenges not appeals

The fundamental problem with all of this is that both the caption and the text refer to challenges, but all the numbers refer to appeals. So if the words are right, all the numbers are wrong; alternatively, if the numbers are right, the words are wrong, as can be verified from the data tables for appeals on the Ofqual website referenced by OCR, and those for challenges, which, for the summer 2023 exams, are on a different, unreferenced, Ofqual website, here.

Those blue boxes, though, and the numbers, have been transcribed correctly by OCR from the Ofqual website. It's Ofqual's website that's wrong, muddling a text referring to "challenges" with numbers referring to "appeals".

The difference between a "challenge" and an "appeal" is as arcane as it is confusing, especially to those, such as students and parents, whose interaction with the appeals process is fleeting. But this is not the case for Ofqual and the exam boards. They know the difference. They understand the difference. And that difference is important: the numbers relating to "challenges" are about 100 times greater than the corresponding numbers for "appeals".

OCR might argue that they reproduced the two boxes from Ofqual's website in good faith. And, yes, the original errors are indeed Ofqual's.

But doesn't OCR have a duty to verify their sources, and sense-check the information in their reports? And given that they administer their own post-results services, surely they must realise that 2,570 is ridiculously small for the number of challenges?

Claim

"…the fact that the number of grades challenged in relation to GCSEs, A and AS levels represents 0.04% of the 6.3 million certificates issued suggests high levels of confidence in the system…"

Fact check

Let me pick up a point I left unresolved earlier – the claim that "the number of grades challenged … represents 0.04% of the 6.3 million certificates issued suggests high levels of confidence in the system", or the corrected claim that "4.9% of the 6.2 million certificates issued for the summer 2023 exams in England suggests high levels of confidence in the system".

No.

Those figures – 0.04% or the correct 4.9% – are evidence of the fact that only a very few grades are challenged (perhaps as a consequence of the up-front fee), and of the unfairness of the appeals system.

The OCR report does not say "the fact that the number of grades changed in relation to GCSEs, A and AS levels represents 0.01% of the 6.3 million certificates issued suggests high levels of confidence in the system", as it might have done, by reference to the right-hand box rather than the left-hand one. And, unlike Pearson, whose website extols "99.2% of our grades were accurate on results day", OCR are not brazen enough to claim "and so 99.96% (or perhaps 99.99%) of grades are right".

But taking the statement as it appears in OCR's report, what might a reader infer? Especially when it is within a section headed "The many benefits of exams", and includes that leading word "suggests", with the subliminal hint of being "suggestive" rather than explicit.

My money is on something like "Wow! That's fantastic! Only 0.04% of grades are appealed! So the other 99.96% of grades must be right!".

OCR's statement is very carefully phrased, but my hunch is that OCR would not be too upset if a reader were to leap to that conclusion.

But to infer – whether explicitly or by implication – the reliability of grades from the numbers of grades changed following a challenge or appeal is a shameful failure of numeracy, primarily because the number of challenges is so small: for the summer 2023 exams, of the 6,171,265 grades awarded, only 303,270 were challenged (about 4.9% of the total), of which 66,205 were changed – 1.1% of the grades awarded, but 21.8% of the grades challenged. Importantly, 5,867,995 grades, 95.1% of the total, were not challenged, so no one knows how many of those would have been changed if only an appeal had been made.
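The arithmetic in that paragraph can be reproduced with a short Python sketch. It uses only the summer 2023 counts quoted above; the variable names are illustrative. Note that it does nothing more than restate the known figures: it cannot say anything about the 95.1% of grades that were never challenged, which is precisely the point.

```python
# Summer 2023 grades in England, using the counts quoted in the article.
TOTAL = 6_171_265      # grades awarded
CHALLENGED = 303_270   # grades challenged
CHANGED = 66_205       # grades changed following a challenge

not_challenged = TOTAL - CHALLENGED

def pct(part: int, whole: int) -> float:
    """Return part as a percentage of whole."""
    return 100 * part / whole

print(f"challenged: {pct(CHALLENGED, TOTAL):.1f}% of all grades")
print(f"changed: {pct(CHANGED, TOTAL):.1f}% of all grades")
print(f"changed: {pct(CHANGED, CHALLENGED):.1f}% of challenged grades")
print(f"not challenged: {not_challenged:,} grades, {pct(not_challenged, TOTAL):.1f}% of all grades")
```

The 21.8% figure applies only to grades that someone chose (and paid) to challenge; extending it to the unchallenged grades would be an assumption, not a finding.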

Two errors of omission

OCR's report is entitled "Striking the balance: A review of 11–16 curriculum and assessment in England". So why are the most urgent and damaging aspects of current assessment – the unreliability of grades, and the unfairness of the appeals system – almost totally absent?

The sole mention that grades are unreliable is buried in a footnote on page 20, which acknowledges that grades are reliable to one grade either way at best – an alarming admission, not least to those 175,000 students, just awarded grade 3 for GCSE English, who might be wondering if they truly merited grade 4.

And other than the reference to the appeals process as already discussed, the fact that, since 2016, the appeals process has deliberately blocked the discovery of grade errors based on differences of academic opinion – even when a subject senior examiner's mark results in a higher grade – is totally ignored.

Why, in a document of 113 pages, are such important matters not examined in depth?

What grade would you give?

As an exam board, OCR sits in judgement of the work of others. In their own work, OCR should therefore display the highest standards.

On the basis of the evidence presented here, what grade would you give this report?

This article was written by Dennis Sherwood, an independent management consultant, and author of 'Missing the Mark – Why so many school exam grades are wrong, and how to get results we can trust' (Canbury Press, 2022)
